Another year has passed, and Tesla’s Full Self-Driving system is still a really cool demonstration suite that creates more work than it replaces. Its predecessor, the highway-only Autopilot, is also back in the news for being associated with a fatal crash that occurred in Gardena, California, in 2019.

While no comprehensive review of the accident appears to be currently available to the public, it’s pretty easy to dissect what happened from news reports. Kevin George Aziz Riad was westbound in a Tesla Model S on the far western end of CA-91 (the Gardena Freeway) with Autopilot engaged just prior to the incident. While reports that Riad “left” the freeway could be misconstrued to imply he somehow lost control and drove off the side of the road, that’s not what happened; the freeway merely ended.

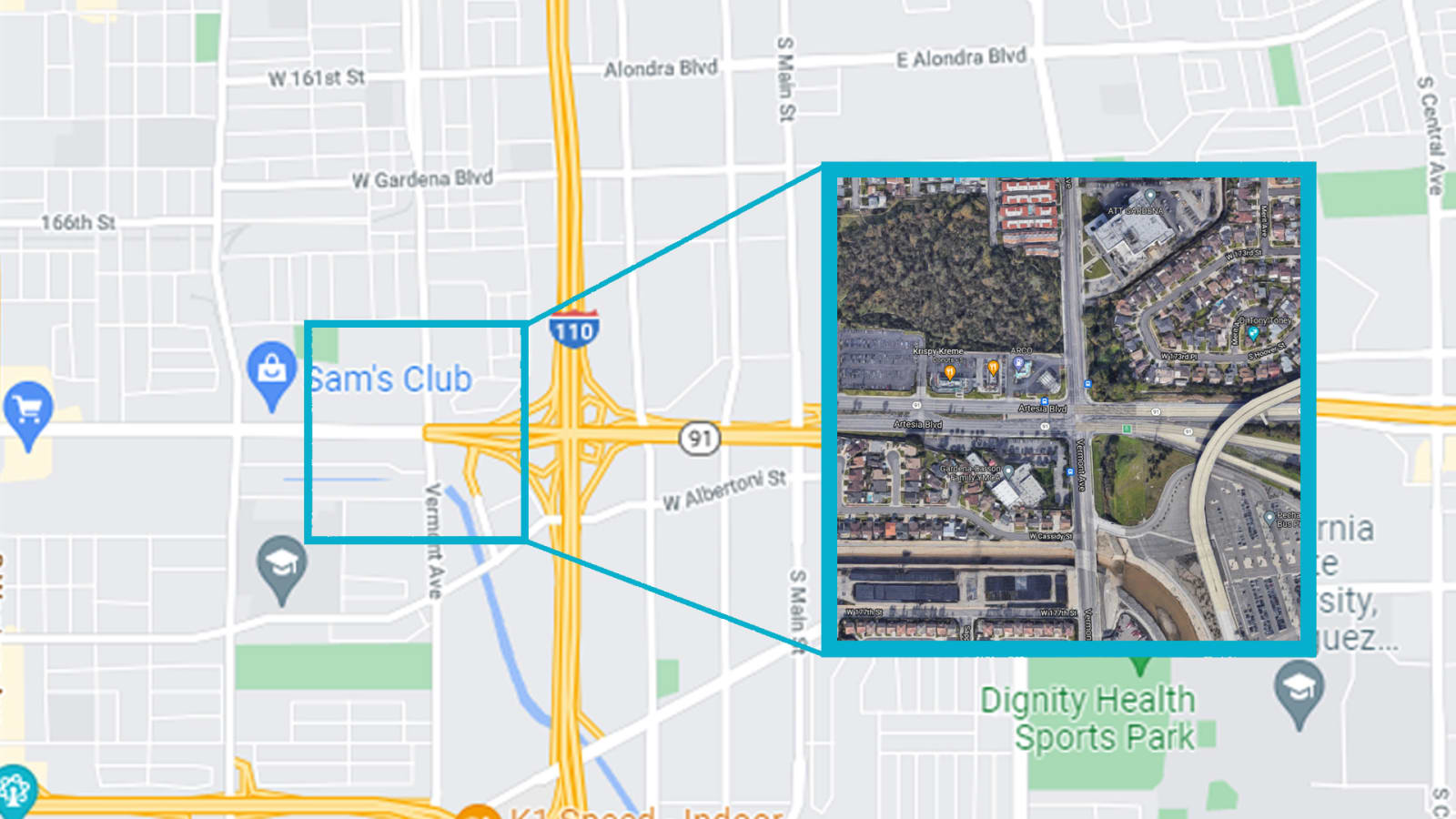

Like many of America’s incomplete urban freeways, the 91 doesn’t merely dead-end at the terminus of its route. Instead, it becomes a semi-divided surface street named Artesia Boulevard. Here’s what that looks like on Google Maps:

What precisely took place inside Riad’s Tesla as it traversed those final few hundred feet of freeway may forever remain a mystery, but what occurred next is well-documented. Riad allegedly ran the red light at the first north-south cross street (Vermont Avenue), striking a Honda Civic with Gilberto Alcazar Lopez and Maria Guadalupe Nieves-Lopez onboard. Both died at the scene. Riad and a passenger in the Tesla were hospitalized with non-life-threatening injuries.

When the 91-Artesia crash happened, Autopilot wasn’t a primary component of the story. That came more recently, when authorities announced that Riad would face two charges of vehicular manslaughter — the first felony charges filed against a private owner who crashed while utilizing an automated driving system. But is Autopilot really the problem in this case?

Autopilot is a freeway driving assist system. While it can follow lanes, monitor and adjust speed, merge, overtake slower traffic and even exit the freeway, it is not a full self-driving suite. It was not meant to detect, understand or stop for red lights (though that functionality did appear after the 91-Artesia crash). Freeways don’t have those. So, if Autopilot was enabled when the accident happened, that means it was operating outside of its prescribed use case. Negotiating the hazards of surface streets is a task for Tesla’s Full Self-Driving software, or at least it will be once it stops running into things, a milestone Tesla CEO Elon Musk has predicted every year for the past several.

In the meantime, situations like this highlight just how large the gap is between what we intuitively expect from self-driving cars and what the technology is currently capable of delivering. Until Riad “left” the freeway, letting Autopilot do the busy work was perfectly reasonable. West of the 110, it became a tragic error, both life-ending and life-altering. And preventable, with just a little attention and human intervention: the very two things self-driving software seeks to make redundant.

I wasn’t in that Tesla back in 2019. I’m not sure why Riad failed to act to prevent the collision. But I do know that semi-self-driving suites require a level of attention that is equivalent to what it takes to actually drive a car. Human judgment — the flawed, supposedly unreliable process safety software proposes to eliminate — is even more critical when using these suites than it was before, especially given the state of U.S. infrastructure.

This incident makes a compelling argument that autonomous vehicles won’t just struggle with poorly painted lane markings and missing reflectors. The very designs of many of our road systems are inherently challenging both to machine and human intelligences. And I use the term “design” loosely and with all respect in the world for the civil engineers who make do with what they’re handed. Like it or not, infrastructure tomfoolery the likes of the 91/110/Artesia Blvd interchange is not uncommon in America’s highway system. Don’t believe me? Here are four more examples of this sort of silliness, just off the top of my head:

If you’ve ever driven on a major urban or suburban highway in the United States, you’re probably familiar with at least one similarly abrupt freeway dead-end. More prominent signage warning of such a major traffic change would benefit human and artificial drivers alike (provided the AI knows what the signs mean). And many of those freeway projects never had any business being authorized in the first place. But those are both (lengthy, divisive) arguments for another time. So too is any real notion of culpability from infrastructure or self-driving systems. Even with the human “driver” removed completely from the equation in this instance, neither infrastructure nor autonomy would be to blame, in the strictest sense. They’re merely two things at odds, and perhaps ultimately incompatible, with each other.

In the current climate, Riad will be treated like any other driver would (and should) under the circumstances. The degree of responsibility will be determined along the same lines it always is. Was the driver distracted? Intoxicated? Tired? Reckless? Whether we can trust technology to do the job for us is not on trial here. Not yet. There are many reasons why automated driving systems are controversial, but the element of autonomy that interests me the most, personally, is liability. If you’re a driver in America, the buck generally stops with you. Yes, there can be extenuating circumstances, defects or other contributing factors in a crash, but liability for a crash almost always falls at the feet of the at-fault driver — a definition challenged by autonomous cars.

I previously joked that we might eventually bore ourselves to death behind the wheel of self-driving cars, but in the meantime, we’re going to be strung out on Red Bull and 5-Hour Energy just trying to keep an eye on our electronic nannies. It used to be that we only had ourselves to second-guess. Now we have to manage an artificial intelligence that, despite being superior to a human brain in certain respects, fails at some of the most basic tasks. Tesla’s FSD can barely pass a basic driver’s ed driving test, and even that requires human intervention. Tesla even taught it to take shortcuts like a lazy human. And now there’s statistical evidence that machines aren’t any safer after all.

So, is the machine better at driving, or are we? Even if the answer were “the machine” (and it’s not), no automaker is offering to cover your legal fees if your semi-autonomous vehicle kills somebody. Consider any self-driving tech to be experimental at best and treat it accordingly. The buck still stops with you.

Source: www.autoblog.com